Fundamentals of media

What is media

Media is a broad term that usually refers to images, audio, or video.

Image

- An image starts with the pixel.

- It is the smallest building block of an image.

- A pixel is usually associated with a color, which can be represented as a combination of red, green, and blue values (RGB), or in another format such as YUV, which stores brightness (luma) separately from the color (chroma) components.

- A pixel can also have an alpha value, which represents its transparency.

- An image is a collection of pixels arranged in a rectangular grid, so it has a width and a height, both measured in pixels.

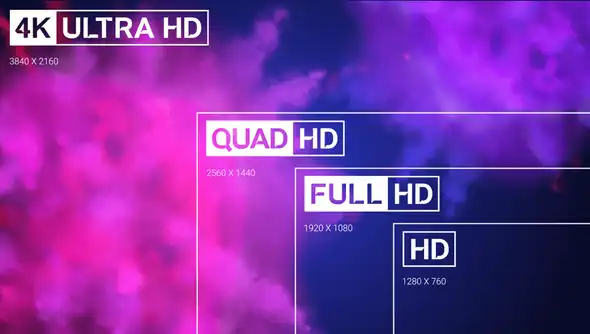
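As a minimal sketch of the ideas above, the Python below models an image as a grid of RGBA pixels and converts one pixel to YUV. The names `Pixel`, `make_image`, and `rgb_to_yuv` are hypothetical, the 8-bit channel range is an assumption, and the YUV formula uses the BT.601 luma weights; other standards such as BT.709 use different coefficients.

```python
from __future__ import annotations

from dataclasses import dataclass

# Illustrative sketch: Pixel, make_image, and rgb_to_yuv are hypothetical names.


@dataclass
class Pixel:
    # 8-bit channel values in the range 0..255 (an assumption for this sketch)
    r: int
    g: int
    b: int
    a: int = 255  # alpha: 255 = fully opaque, 0 = fully transparent


def make_image(width: int, height: int, fill: Pixel) -> list[list[Pixel]]:
    """Return a rectangle of pixels as `height` rows, each `width` pixels long."""
    # Create a fresh Pixel per position so the rows do not share one object.
    return [[Pixel(fill.r, fill.g, fill.b, fill.a) for _ in range(width)]
            for _ in range(height)]


def rgb_to_yuv(p: Pixel) -> tuple[float, float, float]:
    """Convert RGB to analog YUV using BT.601 weights (alpha is ignored)."""
    y = 0.299 * p.r + 0.587 * p.g + 0.114 * p.b  # brightness (luma)
    u = 0.492 * (p.b - y)  # blue-difference color component
    v = 0.877 * (p.r - y)  # red-difference color component
    return y, u, v


img = make_image(4, 3, Pixel(255, 0, 0))  # a 4x3 all-red image
print(len(img[0]), len(img))  # width height -> 4 3
print(rgb_to_yuv(img[0][0]))
```

A pure gray or white pixel has no color difference, so its U and V components come out as (approximately) zero and only Y carries information.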

Image resolution

- Image resolution is the number of pixels in an image, usually written as width x height; the total pixel count is the width multiplied by the height. Higher resolution can hold more detail, which is why it is often used as a measure of image quality. A widely used resolution is 1920x1080, known as Full HD or 1080p, one of the High Definition (HD) formats. There are also higher resolutions like Ultra HD (3840x2160) and DCI 4K (4096x2160).

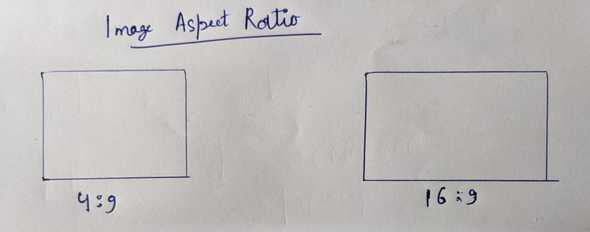

Image aspect ratio

The aspect ratio of an image is the ratio of its width to its height. Common aspect ratios include 16:9 (for example, 1920x1080) and 4:3 (for example, 640x480).
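Resolution and aspect ratio can both be computed from the width and height. This sketch reduces width:height to lowest terms with the greatest common divisor; the helper names are hypothetical.

```python
from math import gcd

# Illustrative helpers; the names are hypothetical.


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels: width multiplied by height."""
    return width * height


def aspect_ratio(width: int, height: int) -> str:
    """Reduce width:height to lowest terms, e.g. 1920x1080 -> '16:9'."""
    d = gcd(width, height)
    return f"{width // d}:{height // d}"


for w, h in [(1920, 1080), (3840, 2160), (640, 480)]:
    print(f"{w}x{h}: {pixel_count(w, h):,} pixels, aspect ratio {aspect_ratio(w, h)}")
# 1920x1080: 2,073,600 pixels, aspect ratio 16:9
# 3840x2160: 8,294,400 pixels, aspect ratio 16:9
# 640x480: 307,200 pixels, aspect ratio 4:3
```

DCI 4K (4096x2160) reduces to 256:135, about 1.9:1, so it is slightly wider than 16:9.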

Audio sample

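- An audio sample is the smallest representation of a sound: a single measurement of the sound wave's amplitude at one point in time.

- It is the building block of digital audio.

- A sample is usually stored as an 8-, 16-, 24-, or even 32-bit value. This is known as the bit depth of the audio.

- More bits per sample give finer amplitude steps and a wider dynamic range, which usually means higher audio quality.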
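The effect of bit depth can be sketched numerically. This illustrative Python assumes samples are floats in [-1.0, 1.0] and quantizes them to signed 16-bit integers by scaling with 32767, which is one common convention (others scale by 32768); the function names are hypothetical.

```python
import math

# Illustrative helpers; the names are hypothetical.


def quantization_levels(bit_depth: int) -> int:
    """Number of distinct values a sample with this bit depth can take."""
    return 2 ** bit_depth


def dynamic_range_db(bit_depth: int) -> float:
    """Theoretical dynamic range of linear PCM: about 6.02 dB per bit."""
    return 20 * math.log10(2 ** bit_depth)


def to_pcm16(x: float) -> int:
    """Quantize a float sample in [-1.0, 1.0] to signed 16-bit, clipping out-of-range input."""
    x = max(-1.0, min(1.0, x))
    return round(x * 32767)  # scale-by-32767 convention; result is in -32767..32767


for bits in (8, 16, 24):
    print(bits, quantization_levels(bits), f"{dynamic_range_db(bits):.1f} dB")
print(to_pcm16(0.5), to_pcm16(1.5))
```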

Audio frequency

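- Audio frequency here means the sampling frequency, or sampling rate: how many samples are taken per second, measured in hertz (Hz).

- Common sampling rates are 44.1 kHz (used on audio CDs) and 48 kHz (common in video production), but other rates are also used.

- A higher sampling rate can capture higher sound frequencies, up to half the sampling rate, so it generally means higher quality.

- So digital audio, in general, is a sequence of samples played back one after another at the sampling rate.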

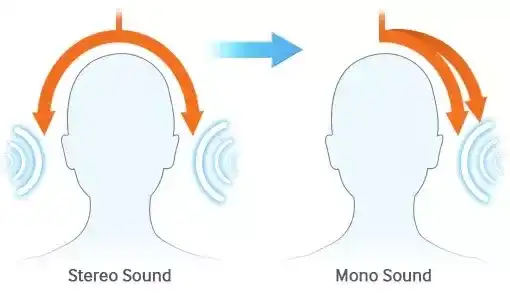
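To connect sampling rate and duration, this sketch generates a sine tone as a list of samples. The function `sine_wave` is hypothetical; note that the tone's pitch (440 Hz here) is a different quantity from the sampling rate (44.1 kHz), even though both are measured in hertz.

```python
from __future__ import annotations

import math

# Illustrative sketch; sine_wave is a hypothetical helper.


def sine_wave(freq_hz: float, duration_s: float, sample_rate: int = 48_000,
              amplitude: float = 0.5) -> list[float]:
    """Generate a mono sine tone as float samples in [-1.0, 1.0]."""
    n_samples = round(duration_s * sample_rate)  # samples = seconds x samples per second
    # Sample n is taken at time n / sample_rate seconds.
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(n_samples)]


tone = sine_wave(440.0, 1.0, sample_rate=44_100)
print(len(tone))           # 44100 samples for one second at 44.1 kHz
print(len(tone) / 44_100)  # duration in seconds -> 1.0
```

Played back at 44.1 kHz, these 44,100 samples last exactly one second; played at 48 kHz, the same list would sound slightly shorter and higher in pitch.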

Audio channel

- An audio channel represents a single sequence of samples.

- Channels of the same audio track are usually meant to be played together.

- Channels can be organized in different channel layouts. For example, a single channel is called mono.

- Two channels are usually treated as stereo, with one left and one right channel.

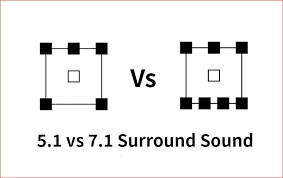

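- There are also surround sound layouts such as 5.1 (five full-range channels plus one low-frequency effects channel) and 7.1 (seven full-range channels plus one low-frequency effects channel).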
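Many PCM formats store stereo audio by interleaving the channels sample by sample (left, right, left, right, ...). The sketch below, with hypothetical helper names and 16-bit integer samples assumed, interleaves two channels and also downmixes them to mono by averaging; real downmixing may apply different gains per channel.

```python
from __future__ import annotations

# Illustrative helpers; names are hypothetical. Samples are 16-bit PCM integers.


def interleave(left: list[int], right: list[int]) -> list[int]:
    """Interleave two channels into L, R, L, R, ... order."""
    if len(left) != len(right):
        raise ValueError("channels must have the same number of samples")
    out: list[int] = []
    for l, r in zip(left, right):
        out.extend((l, r))
    return out


def downmix_to_mono(left: list[int], right: list[int]) -> list[int]:
    """Average the left and right channels into one mono channel."""
    return [(l + r) // 2 for l, r in zip(left, right)]


left = [100, 200, 300]
right = [300, 200, 100]
print(interleave(left, right))       # [100, 300, 200, 200, 300, 100]
print(downmix_to_mono(left, right))  # [200, 200, 200]
```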

Audio tracks

- An audio track represents a collection of channels.

- There can be multiple audio tracks within a single media file, along with other tracks like video.

- Different audio tracks are used to organize different sounds that relate to the same timeline. The tracks may or may not be intended to be played together. For example, a documentary file can have an English track and a French track, which are not meant to be played together, but either of them may be mixed with a background track.
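Mixing two tracks that share a timeline can be sketched as adding their samples at each point in time. This hypothetical `mix` function assumes equal-length mono tracks with float samples in [-1.0, 1.0], turns the background down by a gain factor, and clips the result; professional mixers typically use limiters rather than hard clipping.

```python
from __future__ import annotations

# Illustrative sketch; `mix` is a hypothetical helper, not a library function.


def mix(dialog: list[float], background: list[float],
        background_gain: float = 0.3) -> list[float]:
    """Mix two equal-length mono tracks sample by sample, clipping to [-1.0, 1.0]."""
    if len(dialog) != len(background):
        raise ValueError("tracks must cover the same timeline (same number of samples)")
    return [max(-1.0, min(1.0, d + background_gain * b))
            for d, b in zip(dialog, background)]


english = [0.5, -0.5, 0.9]  # dialog track (a French track could be used instead)
music = [0.5, 0.5, 1.0]     # background track
print(mix(english, music, background_gain=0.5))  # [0.75, -0.25, 1.0]
```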

Video

- A video is a sequence of images. Like audio, a video also has a duration.

- In the video context, each image is called a frame.

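Frame rate (FPS)

- Frame rate, or FPS (frames per second), is the number of frames displayed per second of video.

- Different frame rates originate from different television standards and applications, for example 25 FPS for PAL television, 29.97 FPS for NTSC television, and 24 FPS for cinema.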
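Because a frame rate like 29.97 FPS is really the fraction 30000/1001, exact arithmetic avoids rounding drift when computing frame timing. This sketch uses Python's `fractions.Fraction`; the helper names and the `FRAME_RATES` table are illustrative.

```python
from fractions import Fraction

# Illustrative table and helpers; names are hypothetical.
FRAME_RATES = {
    "cinema": Fraction(24),
    "PAL": Fraction(25),
    "NTSC": Fraction(30000, 1001),  # about 29.97 FPS
}


def frame_duration(fps: Fraction) -> Fraction:
    """How long one frame stays on screen, in seconds."""
    return 1 / fps


def frame_timestamp(index: int, fps: Fraction) -> Fraction:
    """Start time, in seconds, of frame number `index` (0-based)."""
    return index / fps


def frame_count(duration_s: int, fps: Fraction) -> int:
    """Number of whole frames that fit in `duration_s` seconds."""
    return int(Fraction(duration_s) * fps)


for name, fps in FRAME_RATES.items():
    ms = float(frame_duration(fps)) * 1000
    print(f"{name}: {float(fps):.3f} FPS, {ms:.2f} ms per frame, "
          f"{frame_count(60, fps)} frames per minute")
```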

Video compression

- Video compression works by removing redundant information from the raw images, or frames, of a video.

- There are two types of redundancy in a video.

- First, there is spatial redundancy within an image or a frame. Nearby pixels in an area of a 2D frame often do not vary much, so that area can be encoded with fewer bits without losing significant quality. The same idea is used in still-image compression formats such as JPEG.

- Second, there is temporal redundancy between consecutive frames of the same scene. Since objects in a scene usually do not move much from one frame to the next, an encoder can store mostly the changes relative to a previous frame instead of every full frame, which compresses the video further.
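The two kinds of redundancy can be illustrated with toy lossless techniques on grayscale pixel values: run-length encoding for neighboring pixels within a row, and a per-pixel difference between two frames. These are hypothetical helpers for intuition only; real codecs such as H.264 use block transforms, prediction, motion compensation, and entropy coding, and are usually lossy.

```python
from __future__ import annotations

# Toy illustrations of redundancy; helper names are hypothetical,
# and real codecs work very differently.


def run_length_encode(row: list[int]) -> list[tuple[int, int]]:
    """Spatial redundancy toy: collapse runs of identical neighboring pixels into (value, count)."""
    runs: list[list[int]] = []
    for value in row:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1  # same as the neighbor: extend the current run
        else:
            runs.append([value, 1])  # value changed: start a new run
    return [(v, n) for v, n in runs]


def frame_delta(prev: list[int], curr: list[int]) -> list[tuple[int, int]]:
    """Temporal redundancy toy: keep only (index, new_value) for pixels that changed."""
    return [(i, c) for i, (p, c) in enumerate(zip(prev, curr)) if p != c]


row = [200, 200, 200, 200, 50, 50, 200, 200]
print(run_length_encode(row))  # [(200, 4), (50, 2), (200, 2)]

frame1 = [10, 10, 10, 10, 10, 10]
frame2 = [10, 10, 99, 10, 10, 10]  # only one pixel changed between frames
print(frame_delta(frame1, frame2))  # [(2, 99)]
```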

Codec

- The word codec comes from joining the words coder and decoder.

- It can mean either a format or specification for encoding and decoding, or a software library or plugin that actually does the encoding or decoding.

- Raw, or uncompressed, images, audio, and video can be very large and are usually impractical to store or transfer. This is why we compress them by encoding with a codec, so that the media uses less disk space to store and less bandwidth to transfer.

- We need to decode the media when we eventually want to use it, for example, to play it back on a screen or modify it in an editing application.

- Popular video codecs include H.264 (also known as AVC), which is probably the most widely used codec on the Internet today, and its successor H.265 (also known as HEVC), which provides better compression. VP9 was developed by Google. These codecs are well known for compressing strongly while preserving good quality. Other codecs compress less but preserve higher quality and are easier to decode in editing workflows; an example is Apple ProRes.

- There are audio codecs as well. PCM is one of the most popular audio formats, but it is uncompressed. AAC and MP3 are examples of compressed audio formats, or codecs.

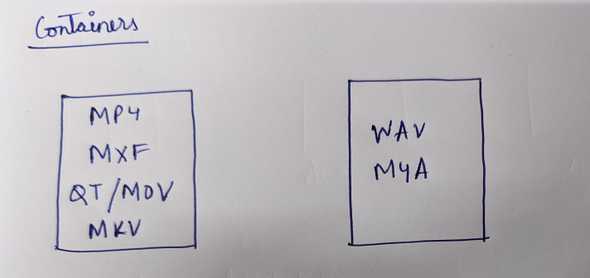
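A quick calculation shows why raw media is impractical to store or transfer. This sketch assumes 8-bit RGB video at 3 bytes per pixel with no chroma subsampling (raw 8-bit YUV 4:2:0 would need half of that) and 16-bit PCM audio; the function names are hypothetical.

```python
# Illustrative arithmetic; helper names are hypothetical.


def raw_video_bitrate(width: int, height: int, fps: float,
                      bytes_per_pixel: float = 3) -> float:
    """Bits per second of uncompressed video (default: 8-bit RGB, 3 bytes per pixel)."""
    return width * height * bytes_per_pixel * 8 * fps


def pcm_bitrate(sample_rate: int, bit_depth: int, channels: int) -> int:
    """Bits per second of uncompressed PCM audio."""
    return sample_rate * bit_depth * channels


video_bps = raw_video_bitrate(1920, 1080, 30)
audio_bps = pcm_bitrate(48_000, 16, 2)
print(f"raw 1080p at 30 FPS: {video_bps / 1e9:.2f} Gbit/s")       # 1.49 Gbit/s
print(f"one hour of it: {video_bps * 3600 / 8 / 1e9:.0f} GB")     # 672 GB
print(f"48 kHz 16-bit stereo PCM: {audio_bps / 1e3:.0f} kbit/s")  # 1536 kbit/s
```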

Containers

- A container is a package, or wrapper, around the media.

- It usually defines a file format and how the media data is organized in the file, so that applications know how to find the right information, such as how many streams the file contains or where to find an audio track.

- Most people are familiar with MP4, since it is probably the most popular container for video on the Internet. MXF is better known in the broadcast world; many high-quality professional cameras record in MXF format.

- Other containers include QuickTime (MOV) and Matroska (MKV).

- These containers can hold both audio and video.

- Some other containers are for audio-only material. For example, the WAV container is very popular for storing high-quality uncompressed PCM audio, while M4A is usually used for storing compressed AAC audio.
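Python's standard-library `wave` module can illustrate a container wrapping PCM audio: it writes the RIFF/WAVE header that records the channel count, sample width, and sampling rate, and reads those fields back. The helpers `write_pcm_wav` and `describe_wav` are hypothetical, and the samples are assumed to be 16-bit mono.

```python
from __future__ import annotations

import io
import struct
import wave

# Illustrative helpers; names are hypothetical. Samples are assumed to be 16-bit mono PCM.


def write_pcm_wav(samples: list[int], sample_rate: int = 48_000) -> bytes:
    """Wrap 16-bit PCM samples in a WAV (RIFF) container and return the file bytes."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)            # mono
        w.setsampwidth(2)            # 2 bytes per sample = 16-bit
        w.setframerate(sample_rate)
        # WAV stores PCM as little-endian signed integers ("<h" in struct notation).
        w.writeframes(struct.pack(f"<{len(samples)}h", *samples))
    return buf.getvalue()


def describe_wav(data: bytes) -> dict:
    """Read the stream parameters back from the WAV header."""
    with wave.open(io.BytesIO(data), "rb") as r:
        return {
            "channels": r.getnchannels(),
            "bit_depth": r.getsampwidth() * 8,
            "sample_rate": r.getframerate(),
            "frames": r.getnframes(),
        }


wav_bytes = write_pcm_wav([0, 1000, -1000, 32767])
print(wav_bytes[:4], wav_bytes[8:12])  # b'RIFF' b'WAVE'
print(describe_wav(wav_bytes))  # {'channels': 1, 'bit_depth': 16, 'sample_rate': 48000, 'frames': 4}
```

The PCM samples themselves are unchanged; the container only adds the header that tells a reader how to interpret them.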

Why so many codecs and containers

- Some of the differences arise from the fact that different companies have developed different codecs and formats.

- They often focus on solving different problems, so each codec offers benefits suited to particular requirements.

- Some codecs are good for preserving higher quality for recording and editing, while others are better for compression and streaming.

- Some codecs are free to use, while others require paying licensing fees.

Codecs are used for compressing video and audio, and containers are used to wrap them into a structured file format.

Transcoding

- Transcoding literally means going from one encoding to another.

- So when we talk about transcoding media, we mean taking media encoded with one codec, decoding it, and encoding it again with a different codec.

Why do we need to transcode

- Why convert media from one codec to another? Because different codecs are good for different situations.

- When editing media for production, we need to preserve high quality, even if it takes more space to store. But when we want to stream the same media over the Internet, we may want to transcode it to a smaller size.

- Transcoding is also needed when the source media is not usable by a target application.
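In practice, transcoding is often done with a tool such as FFmpeg. This Python sketch only builds the command line, assuming an FFmpeg build with the libx264 encoder and its built-in `aac` encoder; the function and file names are hypothetical, and CRF 23 (libx264's default) is just an example quality setting.

```python
from __future__ import annotations

import shlex

# Illustrative sketch: the function and file names are hypothetical; assumes an
# FFmpeg build that includes the libx264 encoder.


def transcode_for_streaming(src: str, dst: str, crf: int = 23) -> list[str]:
    """Build an FFmpeg command that re-encodes video to H.264 and audio to AAC."""
    return [
        "ffmpeg", "-i", src,
        "-c:v", "libx264", "-crf", str(crf),  # H.264 video; higher CRF = smaller file, lower quality
        "-c:a", "aac", "-b:a", "128k",        # AAC audio at 128 kbit/s
        dst,
    ]


cmd = transcode_for_streaming("master.mov", "web.mp4")
print(shlex.join(cmd))
# ffmpeg -i master.mov -c:v libx264 -crf 23 -c:a aac -b:a 128k web.mp4
# To run it (needs ffmpeg on PATH): subprocess.run(cmd, check=True)
```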

Transmuxing

- Transmuxing means converting the container of the media, not the codec: the encoded streams are repackaged without being re-encoded.

- For example, a media player may support H.264-encoded media in MP4 files only.

- In that case, if you have H.264 media in an MXF file, the player will not be able to read it even though it supports the codec. You will need to transmux the source, repackaging the H.264 content into an MP4 container.
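With FFmpeg, transmuxing corresponds to stream copy (`-c copy`), which copies the encoded streams into the new container instead of re-encoding them. This sketch assumes FFmpeg is installed; the function and file names are hypothetical, and a source whose streams are not allowed in the target container would still need transcoding.

```python
from __future__ import annotations

import shlex

# Illustrative sketch; the function and file names are hypothetical.


def transmux_command(src: str, dst: str) -> list[str]:
    """Build an FFmpeg command that repackages streams into a new container without re-encoding."""
    # "-c copy" copies the already-encoded streams; the output container is
    # chosen from the file extension of dst (here .mp4).
    return ["ffmpeg", "-i", src, "-c", "copy", dst]


print(shlex.join(transmux_command("camera.mxf", "camera.mp4")))
# ffmpeg -i camera.mxf -c copy camera.mp4
```

Because nothing is re-encoded, transmuxing is usually much faster than transcoding and leaves the quality unchanged.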

Thumbnail generation

- During transcoding, we often also generate thumbnails, which are still images taken from the source video.

- Thumbnails are usually small images extracted at different points in time of the video.
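One simple approach, sketched here as an assumption rather than a standard, is to space thumbnails evenly across the video and build one FFmpeg command per timestamp. The helper and file names are hypothetical; `-ss`, `-frames:v`, and the `scale` filter are standard FFmpeg options.

```python
from __future__ import annotations

# Illustrative sketch; helper and file names are hypothetical.


def thumbnail_times(duration_s: float, count: int) -> list[float]:
    """Evenly spaced timestamps that skip the very start and end of the video."""
    step = duration_s / (count + 1)
    return [step * (i + 1) for i in range(count)]


def thumbnail_command(src: str, t: float, out: str, width: int = 320) -> list[str]:
    """Build an FFmpeg command that saves one scaled frame at time t (seconds)."""
    # -ss before -i seeks in the input; -frames:v 1 writes a single frame;
    # scale=W:-1 resizes to width W and picks the height that keeps the aspect ratio.
    return ["ffmpeg", "-ss", f"{t:.3f}", "-i", src, "-frames:v", "1",
            "-vf", f"scale={width}:-1", out]


times = thumbnail_times(60.0, 4)
print(times)  # [12.0, 24.0, 36.0, 48.0]
for n, t in enumerate(times, start=1):
    print(" ".join(thumbnail_command("input.mp4", t, f"thumb_{n}.jpg")))
```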

Frame rate conversion

- Frame rate conversion is another operation that can happen during transcoding.

- Different television systems can require different frame rates, and we need to be able to convert a video to a target frame rate to support them.

- Some cameras can record at higher frame rates like 60 FPS, which preserve more detail of motion and are useful for slow-motion video. We may then want to convert to a lower frame rate when preparing a clip for playback or streaming.
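The simplest form of frame rate conversion drops or repeats frames: for each output frame, take the source frame that would be on screen at that moment. This hypothetical sketch uses exact fractions; real converters may instead blend frames or use motion interpolation for smoother results.

```python
from __future__ import annotations

from fractions import Fraction

# Illustrative sketch; source_frame_indices is a hypothetical helper.


def source_frame_indices(src_fps, dst_fps, n_output_frames: int) -> list[int]:
    """For each output frame, pick the source frame on screen at that moment."""
    src, dst = Fraction(src_fps), Fraction(dst_fps)
    # Output frame i starts at time i / dst; the source frame showing then is
    # floor(time * src). int() floors here because the values are non-negative.
    return [int(i * src / dst) for i in range(n_output_frames)]


print(source_frame_indices(60, 30, 4))  # [0, 2, 4, 6]: every other frame dropped
print(source_frame_indices(24, 30, 5))  # [0, 0, 1, 2, 3]: one frame repeated per 5
```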

Bitrate conversion

- We can choose to change the bitrate of media during transcoding.

- Bitrate means how many bits are stored or transferred for one second of media, usually given in kilobits or megabits per second (kbit/s, Mbit/s).

- Modern cameras are able to record video at very high bitrates, which usually implies high quality, but it also means that more storage space is needed to store the media and more bandwidth is needed to stream it. Lowering the bitrate during transcoding reduces both, at some cost in quality.
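The storage cost of a bitrate is simple arithmetic: bits per second times duration, divided by 8 bits per byte. The bitrates below are hypothetical example values, and the sketch assumes a constant bitrate and ignores container overhead.

```python
# Illustrative arithmetic; the helper name and bitrates are hypothetical examples.


def size_bytes(bitrate_bps: float, duration_s: float) -> float:
    """Storage for constant-bitrate media: bits per second x seconds / 8 bits per byte."""
    return bitrate_bps * duration_s / 8


camera = size_bytes(100_000_000, 60)  # one minute at 100 Mbit/s
stream = size_bytes(5_000_000, 60)    # the same minute at 5 Mbit/s
print(f"camera: {camera / 1e6:.0f} MB, stream: {stream / 1e6:.1f} MB")
# camera: 750 MB, stream: 37.5 MB
```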

Audio volume adjustment

Transcoding works not just on video, but on audio as well. For example, we can choose to amplify the incoming audio or normalize the volume.
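Amplifying and normalizing can be sketched on float samples in [-1.0, 1.0]: a gain in decibels becomes a linear factor of 10^(dB/20), and peak normalization scales the audio so its loudest sample reaches a target level. The helper names are hypothetical, and this is peak normalization only; broadcast workflows usually normalize perceived loudness (for example, to an EBU R128 target in LUFS), which requires a loudness measurement that this sketch does not implement.

```python
from __future__ import annotations

# Illustrative helpers; names are hypothetical. Samples are floats in [-1.0, 1.0].


def db_to_gain(db: float) -> float:
    """Convert a change in decibels to a linear amplitude factor (+6 dB is roughly x2)."""
    return 10 ** (db / 20)


def amplify(samples: list[float], db: float) -> list[float]:
    """Apply a gain in dB, clipping samples that would exceed full scale."""
    g = db_to_gain(db)
    return [max(-1.0, min(1.0, s * g)) for s in samples]


def peak_normalize(samples: list[float], target_dbfs: float = -1.0) -> list[float]:
    """Scale samples so the loudest peak lands at target_dbfs (0 dBFS = full scale)."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # silence: nothing to normalize
    g = db_to_gain(target_dbfs) / peak
    return [s * g for s in samples]


print(amplify([0.1, 0.6], 6.0))
print(peak_normalize([0.25, -0.5], target_dbfs=0.0))  # [0.5, -1.0]
```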