Basic transcoding architecture and flow behind ffmpeg

Let's look at the basic transcoding architecture and data flow behind ffmpeg.

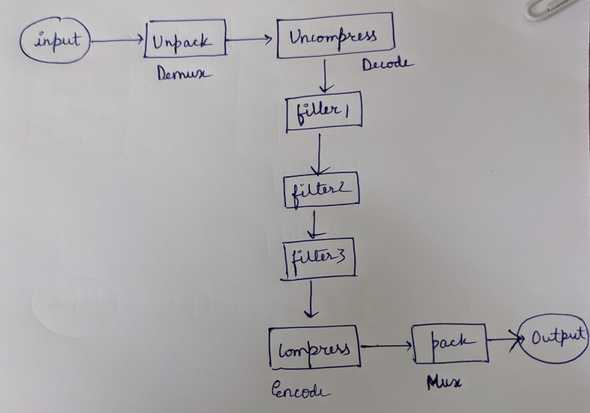

Transcoding and filters

- After the input is read, it needs to be unpacked so that the video and audio can be separated, since different parts of the media need to be handled differently.

- After unpacking, we need to decompress the video and audio to get the actual video pixels and audio samples.

- Then we go through a kind of reverse process: the data is compressed again, possibly with a different algorithm.

- Finally, the data is packed, possibly into a different format, before being written to the output.

- These components also have more formal names.

- Unpacking is known as demuxing.

- Decompressing falls under the more general process called decoding.

- Similarly, compressing is known as encoding, and packing is referred to as muxing.

- So after the media is read, unpacked and decompressed, it can go through any number of filters that modify it before it is compressed, packed and finally written to the output.
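To make these stage names concrete, here is a toy model of the pipeline in Python. It does not use ffmpeg at all: the "container" is a hypothetical list of (stream name, packet) pairs, the "codec" is run-length encoding, and the filters are simple per-value functions, all chosen purely for illustration.

```python
"""Toy model of the demux -> decode -> filter -> encode -> mux pipeline.

None of this is ffmpeg's API: the container layout, the run-length "codec"
and the filters are hypothetical stand-ins used only to make each stage visible.
"""


def demux(container):
    """Toy demuxer: split a container (a list of (stream, packet) pairs) by stream."""
    streams = {}
    for stream_name, packet in container:
        streams.setdefault(stream_name, []).append(packet)
    return streams


def rle_decode(packet):
    """Toy decoder: expand (value, count) pairs into raw samples or pixels."""
    raw = []
    for value, count in packet:
        raw.extend([value] * count)
    return raw


def rle_encode(raw):
    """Toy encoder: compress raw samples or pixels into (value, count) pairs."""
    packet = []
    for value in raw:
        if packet and packet[-1][0] == value:
            packet[-1] = (value, packet[-1][1] + 1)
        else:
            packet.append((value, 1))
    return packet


def mux(streams):
    """Toy muxer: pack each stream's encoded packets back into one container."""
    return [(name, packet) for name, packets in streams.items() for packet in packets]


def transcode(container, filters):
    """Demux, then decode -> filter -> encode each stream separately, then mux."""
    encoded_streams = {}
    for name, packets in demux(container).items():
        # Decode every packet of this stream into one list of raw values.
        raw = [value for packet in packets for value in rle_decode(packet)]
        # Apply this stream's filters in order (none if the stream has no entry).
        for apply_filter in filters.get(name, []):
            raw = apply_filter(raw)
        # Re-encode; a real transcode could pick a different codec here.
        encoded_streams[name] = [rle_encode(raw)]
    return mux(encoded_streams)


if __name__ == "__main__":
    container = [
        ("video", [(10, 3)]),   # three pixels with value 10
        ("audio", [(100, 2)]),  # two audio samples with value 100
        ("video", [(20, 1)]),   # one more pixel, interleaved after the audio
    ]
    filters = {
        "video": [lambda pixels: [255 - p for p in pixels]],   # invert pixels
        "audio": [lambda samples: [s // 2 for s in samples]],  # halve volume
    }
    print(transcode(container, filters))
    # [('video', [(245, 3), (235, 1)]), ('audio', [(50, 2)])]
```

Each stream is decoded, filtered and re-encoded independently, which is why the demuxer has to separate the video and audio first.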

Example flow

- The input can be a file on disk, but it can also be read over network protocols such as HTTP or FTP. In this example, the input is in a muxed format, with video and audio packed together into a container.

- This goes through a component called a demuxer, which understands the container format and separates the video and audio data.

- The demuxer comes from libavformat, one of the libraries inside ffmpeg.

- The output of the demuxer is a series of encoded packets, each of which holds compressed data for a single stream (video or audio).

- These packets then go through a decoder from libavcodec, which is another ffmpeg library.

- The decoder outputs decoded frames of raw image and sound data. These frames can then pass through any number of filters that modify the video and audio.

- These filters come from yet another ffmpeg library, libavfilter.

- Next, the filtered frames enter an encoder, also from libavcodec, which compresses the raw data with some codec.

- Then these encoded packets go through a muxer, again from libavformat, which packages the data into a container format and writes it to the output.
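On the ffmpeg command line, the same stages map onto options. The sketch below only assembles an argument list; `build_ffmpeg_command` is a hypothetical helper, and the file names, the `scale=1280:720` and `volume=0.5` filters, and the default `libx264`/`aac` encoders are example choices (`libx264` is available only if ffmpeg was built with it).

```python
import shlex


def build_ffmpeg_command(src, dst, *, video_filter=None, audio_filter=None,
                         video_codec="libx264", audio_codec="aac",
                         output_format=None):
    """Assemble an ffmpeg argv list; comments note which stage each option drives.

    build_ffmpeg_command is a hypothetical helper, not part of ffmpeg.
    """
    # Input: ffmpeg probes it to pick the demuxer (libavformat);
    # decoders (libavcodec) are chosen from the streams it finds.
    cmd = ["ffmpeg", "-i", src]
    if video_filter:
        cmd += ["-vf", video_filter]   # video filtergraph (libavfilter)
    if audio_filter:
        cmd += ["-af", audio_filter]   # audio filtergraph (libavfilter)
    # Encoders (libavcodec) for the video and audio output streams.
    cmd += ["-c:v", video_codec, "-c:a", audio_codec]
    if output_format:
        # Force the muxer (libavformat); otherwise it is guessed from dst's extension.
        cmd += ["-f", output_format]
    cmd.append(dst)
    return cmd


if __name__ == "__main__":
    cmd = build_ffmpeg_command(
        "input.mp4", "output.mkv",
        video_filter="scale=1280:720",
        audio_filter="volume=0.5",
        output_format="matroska",
    )
    print(shlex.join(cmd))
    # ffmpeg -i input.mp4 -vf scale=1280:720 -af volume=0.5 -c:v libx264 -c:a aac -f matroska output.mkv
```

To actually run it, the list can be passed to `subprocess.run(cmd, check=True)` on a machine with ffmpeg installed. Passing an argument list rather than one shell string avoids quoting problems with file names.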