AOT vs JIT compilation in Java

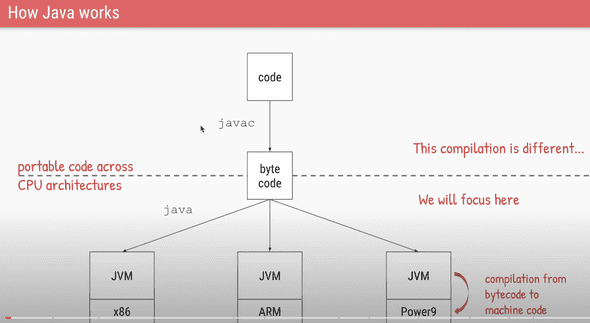

Java code is first compiled into bytecode using the javac tool. This bytecode is an intermediate representation of your code, and it gives you portability: you can run the same bytecode across different kinds of architectures.
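
To make "bytecode" a bit more concrete, here is a minimal sketch. The class name Adder is made up for illustration. The comments show the bytecode that javac typically produces for the add method, as you would see it with javap -c.

```java
// Hypothetical example class, not taken from any real code base.
class Adder {
    static int add(int a, int b) {
        return a + b;
    }
    // Typical bytecode for add, as printed by "javap -c Adder":
    //   0: iload_0   // push the first int argument
    //   1: iload_1   // push the second int argument
    //   2: iadd      // pop both values, push their sum
    //   3: ireturn   // return the int on top of the stack

    public static void main(String[] args) {
        System.out.println(add(2, 3)); // prints 5
    }
}
```

These four instructions are the same on every platform. Turning them into x86 or ARM machine instructions is left to the JVM.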

When your application runs on the JVM, the JVM converts this bytecode into machine code, and this step is also called compilation.

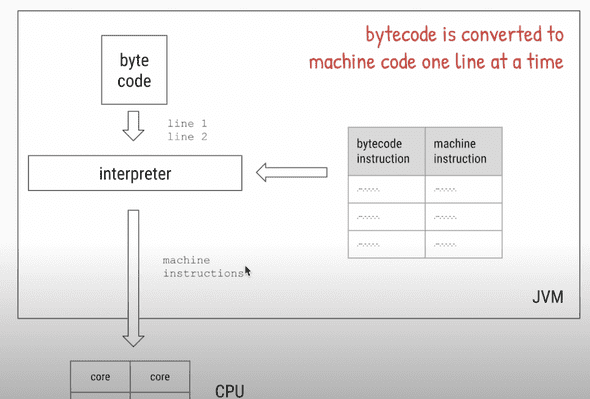

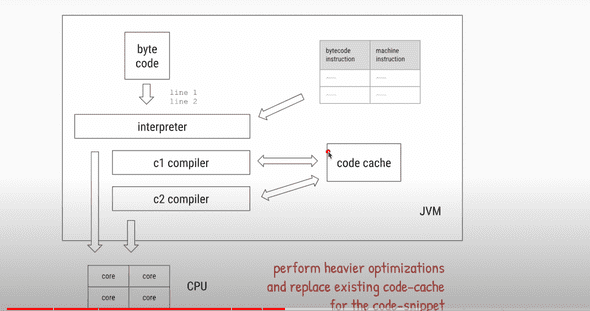

When the application starts, the JVM has access to the bytecode. It goes through it one instruction at a time and uses some sort of dictionary which tells it: you are running on the x86 architecture (an Intel or AMD chip), and for this bytecode instruction you need these machine instructions.

So there is a component within the JVM called the interpreter. It goes through the bytecode one instruction at a time, uses this dictionary to map each bytecode instruction to machine instructions, and sends those instructions to the CPU to execute. That is how your application starts running.

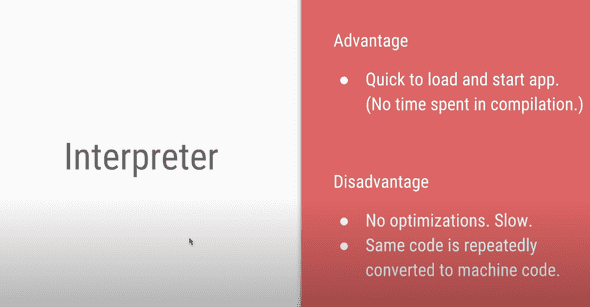

So the interpreter is quick to start your application: it doesn't spend time compiling your code up front.

But converting each bytecode instruction into machine code again and again, even for repeated code such as loops or methods that are called over and over, is very inefficient.

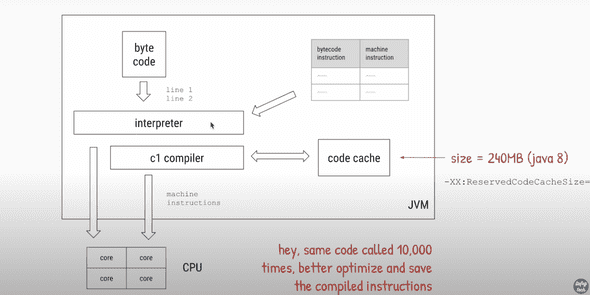

The JVM keeps performance counters that track how many times each method or snippet of code is executed. For example, if there is a method called add and it is called repeatedly, the counter for that method is incremented on every call. Suppose the counter reaches a particular threshold, say add has been called 10,000 times since the application started. That means the method is likely to be used many more times, so it is better to compile it once and save the compiled copy. While we are at it, we can optimize the code a little so that the compiled code runs faster than the interpreted code. That is the job of the C1 compiler. (The exact threshold depends on the JVM version and flags; 10,000 is used here as a round number.)
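
A small program you can use to watch this happen. The class and method names are made up for illustration. If you run it on a HotSpot JVM with the -XX:+PrintCompilation flag, you may see a line for HotMethodDemo::add once it has been called often enough; the exact output depends on your JVM version.

```java
// Hypothetical demo class: calls a tiny method often enough to make it "hot".
class HotMethodDemo {
    static int add(int a, int b) {
        return a + b;
    }

    // Calls add(i, 1) for i = 0 .. calls-1 and sums the results.
    static long sumWithAdd(int calls) {
        long total = 0;
        for (int i = 0; i < calls; i++) {
            total += add(i, 1);
        }
        return total;
    }

    public static void main(String[] args) {
        // 1 + 2 + ... + 20000 = 200010000
        System.out.println(sumWithAdd(20_000));
    }
}
```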

The C1 compiler takes the snippets of code that the interpreter has run 10,000 times, compiles and optimizes them, and saves the resulting machine instructions in an area of the JVM called the code cache. The next time the method is called, instead of the interpreter doing the conversion, the JVM picks up the compiled, optimized code from the code cache and gives those machine instructions to the CPU to run. So now that part of your code has become faster.
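
The counter, threshold and code cache idea can be sketched as a toy model. This is only an illustration of the flow described above, not how HotSpot is actually implemented: the class ToyJit, its hash maps and the lambda standing in for "compiled code" are all assumptions made for this sketch.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.IntBinaryOperator;

// Toy model of "interpret, count calls, compile when hot". Not real JVM internals.
class ToyJit {
    static final int THRESHOLD = 10_000; // round number used in the text

    private final Map<String, Integer> callCounters = new HashMap<>();
    private final Map<String, IntBinaryOperator> codeCache = new HashMap<>();

    int call(String method, int a, int b) {
        IntBinaryOperator compiled = codeCache.get(method);
        if (compiled != null) {
            return compiled.applyAsInt(a, b); // fast path: reuse the compiled copy
        }
        int count = callCounters.merge(method, 1, Integer::sum);
        if (count >= THRESHOLD) {
            codeCache.put(method, compile(method)); // method is hot: compile it once
        }
        return interpret(method, a, b); // slow path: interpret this call
    }

    boolean isCompiled(String method) {
        return codeCache.containsKey(method);
    }

    // Stands in for instruction-by-instruction interpretation.
    private static int interpret(String method, int a, int b) {
        if (method.equals("add")) {
            return a + b;
        }
        throw new IllegalArgumentException("unknown method: " + method);
    }

    // Stands in for the C1 compiler producing machine code.
    private static IntBinaryOperator compile(String method) {
        if (method.equals("add")) {
            return (a, b) -> a + b;
        }
        throw new IllegalArgumentException("unknown method: " + method);
    }

    public static void main(String[] args) {
        ToyJit jit = new ToyJit();
        for (int i = 0; i < THRESHOLD; i++) {
            jit.call("add", i, 1);
        }
        System.out.println(jit.isCompiled("add")); // prints true
    }
}
```

The first 10,000 calls take the slow path. From then on every call to add is served from the map that plays the role of the code cache.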

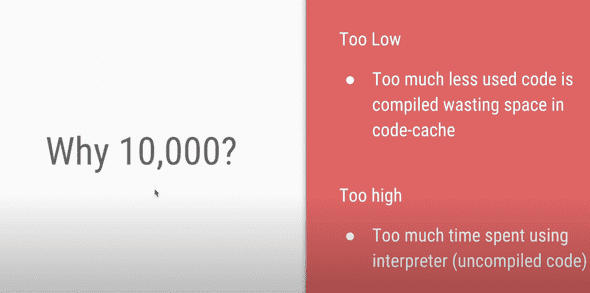

Choosing 10,000 as the performance counter threshold has certain trade-offs. If we keep this counter too low, let's say 10, then every method or snippet of code that has run only 10 times will go through the C1 compiler and be optimized. The problem is that the code cache is fairly small, only 240 MB by default. Even the parts of the code that are used very infrequently would be optimized, so we would waste a lot of CPU and other resources on optimizing them, and the 240 MB code cache would fill up very quickly. If we keep the counter too high, hot code stays in the slow interpreter for longer than it needs to.
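
If you want to check the code cache size on your own JVM, the standard java.lang.management API exposes the JVM's memory pools. A minimal sketch, assuming a HotSpot JVM where the code cache pools have "Code" in their name (the pool names differ between Java versions, and other JVMs may not report such a pool at all). The class name CodeCacheReport is made up.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;

// Hypothetical helper: lists non-heap memory pools that look like the code cache.
class CodeCacheReport {
    static String report() {
        StringBuilder out = new StringBuilder();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            // Assumption: code cache pools are non-heap and contain "Code" in the name.
            boolean looksLikeCodeCache = pool.getType() == MemoryType.NON_HEAP
                    && pool.getName().contains("Code");
            MemoryUsage usage = pool.getUsage();
            if (!looksLikeCodeCache || usage == null) {
                continue;
            }
            // getMax() returns -1 when the maximum is undefined.
            String max = usage.getMax() < 0 ? "undefined" : (usage.getMax() / 1024) + " KB";
            out.append(pool.getName())
               .append(": used=").append(usage.getUsed() / 1024).append(" KB")
               .append(", max=").append(max)
               .append(System.lineSeparator());
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.print(report());
    }
}
```

On HotSpot the maximum size can be changed with the -XX:ReservedCodeCacheSize flag.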

The application keeps running for a while, and in the background the JVM collects runtime statistics about how your code is executed. This is also called code profiling. The JVM tries to build control flow graphs, or code paths, of your code to find the hottest parts, the ones that are executed all the time. Once it has enough statistics to say that yes, this is the control flow, this is the code path that is always used in the application, it asks the other compiler, the C2 compiler, to perform heavier optimizations on that part of the code.

The C2 compiler performs heavier optimizations, and the resulting compiled code (machine instructions) is also kept in the code cache. If the same method was already compiled by C1, C2 replaces C1's machine instructions for that method with its own output.

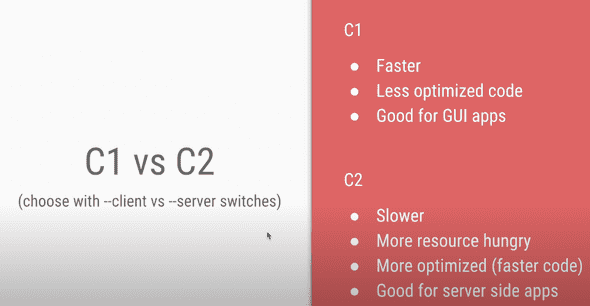

In Java 7 and before, we could choose which compiler we wanted: the client compiler, i.e. C1, or the server compiler, i.e. C2. C1 does not consume too many resources and compiles faster, but it does fewer optimizations. The C2 compiler is the opposite: it is slower and more resource hungry, but it does heavier optimizations and produces faster code. So C1 was used for GUI applications, and C2 was used for servers, which are long-running applications.

In Java 7 there was an option to use both of them together, and in Java 8 that became the default behavior, so now we have both compilers running simultaneously.

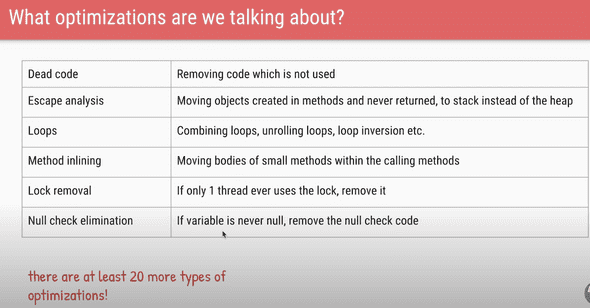

If some part of your code is never executed or never used at all, it is removed from the compilation. If an object is created in a method and is never returned or otherwise made visible outside that method, it can be kept on the stack rather than allocated on the heap, so that the garbage collector has less work to do. These are only a subset of the optimizations.
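
Here is a small sketch of code where both of these optimizations could apply. The class EscapeDemo and its Point type are made up for illustration. Whether a particular JVM actually performs the optimizations on this code is up to its JIT compiler, so treat the comments as what may happen, not what is guaranteed.

```java
// Hypothetical example: candidates for dead code removal and escape analysis.
class EscapeDemo {
    static final class Point {
        final int x;
        final int y;

        Point(int x, int y) {
            this.x = x;
            this.y = y;
        }
    }

    static int distanceSquared(int x, int y) {
        // p is never returned and never stored anywhere else, so it does not
        // escape this method. The JIT may avoid the heap allocation entirely.
        Point p = new Point(x, y);

        boolean debug = false;
        if (debug) {
            // This branch is never taken, so the JIT may drop it from the compiled code.
            System.out.println("point: " + p.x + ", " + p.y);
        }
        return p.x * p.x + p.y * p.y;
    }

    public static void main(String[] args) {
        long total = 0;
        for (int i = 0; i < 100_000; i++) {
            total += distanceSquared(3, 4);
        }
        System.out.println(total); // prints 2500000
    }
}
```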

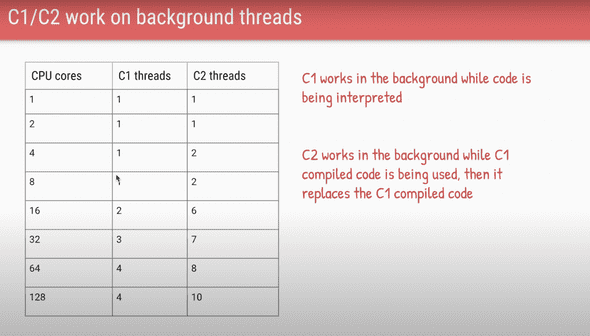

This whole flow of profiling the code, finding the hot parts that are used most frequently, and only compiling and further optimizing those is the very reason why Oracle's VM is called the HotSpot VM. The C1 and C2 compilers don't stop the application from running. Based on the number of cores, the JVM decides how many background threads to create for C1 and C2. While the code is being interpreted, compilation goes on behind the scenes, and once the compiled code is ready the VM executes it from the code cache instead of asking the interpreter to do it.

All this compilation and interpretation happens at runtime: the compilation is done only once your application has started and is running. That is why it is called JIT (just-in-time) compilation.
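
You can observe that compilation happens while the program is running by asking the JVM how much time it has spent in the JIT compiler so far. A minimal sketch using the standard CompilationMXBean. The class name JitAtRuntime and the workload are made up, and the numbers you get will vary from run to run and from JVM to JVM.

```java
import java.lang.management.CompilationMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical demo: reads the JIT's accumulated compilation time before and after some work.
class JitAtRuntime {
    // Returns total JIT compilation time in milliseconds, or -1 if the JVM does not report it.
    static long compileMillis() {
        CompilationMXBean jit = ManagementFactory.getCompilationMXBean();
        if (jit == null || !jit.isCompilationTimeMonitoringSupported()) {
            return -1;
        }
        return jit.getTotalCompilationTime();
    }

    // Some repetitive work that gives the JIT a hot loop to compile.
    static long work(int n) {
        long total = 0;
        for (int i = 0; i < n; i++) {
            total += i % 7;
        }
        return total;
    }

    public static void main(String[] args) {
        CompilationMXBean jit = ManagementFactory.getCompilationMXBean();
        System.out.println("JIT compiler: " + (jit == null ? "none" : jit.getName()));
        long before = compileMillis();
        long result = work(50_000_000);
        long after = compileMillis();
        System.out.println("work result: " + result);
        System.out.println("compile time before: " + before + " ms, after: " + after + " ms");
    }
}
```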

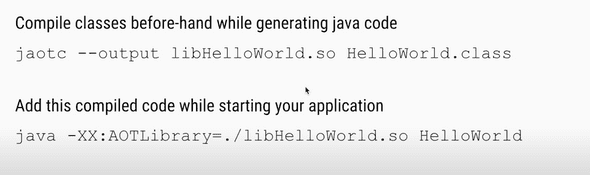

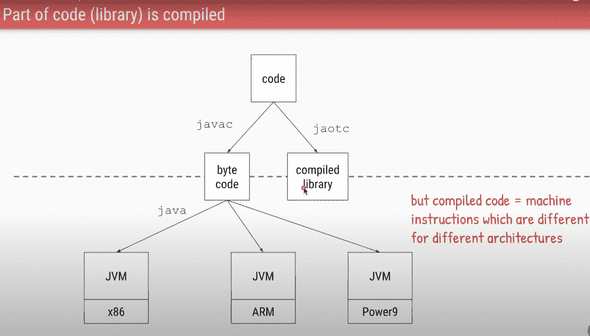

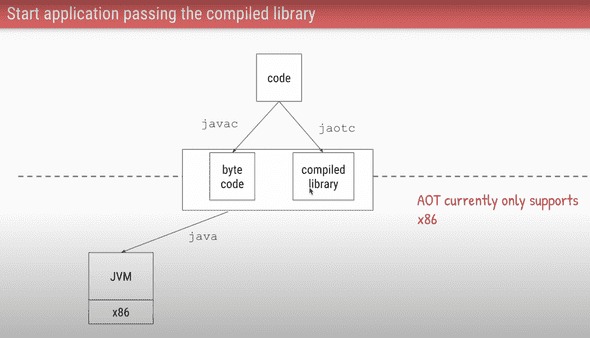

The question is, can't we do this AOT (ahead of time)? Can't we do it while we are converting our code to bytecode? From Java 9 we have the option of converting some of our code or some of our libraries into compiled code before we even run our application. Just like javac, there is an executable (jaotc) that converts bytecode into compiled code in a .so file. Then, whenever we want to run any of our classes, we have to pass this .so file to the JVM.

Bytecode is portable: it can run across multiple architectures. But when you compile it, you are converting your bytecode into the machine instructions of one particular architecture, so how can this compiled library be cross-platform? The answer is that it cannot be. As of Java 9, AOT compilation only supports the x86 architecture, so our compiled library will only run on that architecture. So our runtime changes a little bit.

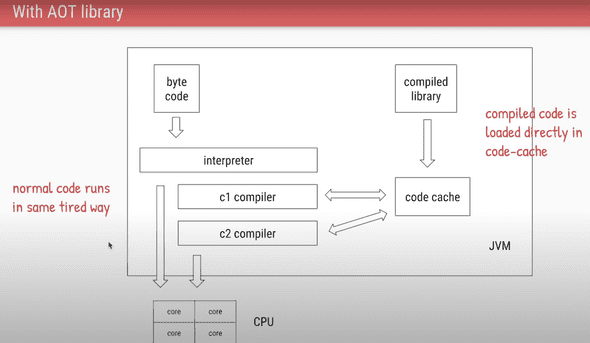

Now, in our runtime, anything loaded as bytecode will continue to run as before (it will go through the interpreter, C1 and C2). Any compiled libraries that are loaded will be added directly to the code cache.

So we use bytecode because it is portable across architectures. The code is initially interpreted one instruction at a time, and after some threshold, say 10,000 executions, it is compiled to machine code by C1. Based on runtime analysis, or code profiling, the code is further optimized by C2.