Android Runtime with Profile-Guided Optimizations

Recent conversations around Android runtime optimizations have centered on a technique known as Profile-Guided Optimization (also called Profile-Directed Feedback). The idea is that the software engineer anticipates the code paths a user typically goes through and pre-compiles those paths, instead of leaving them to the JIT compiler.

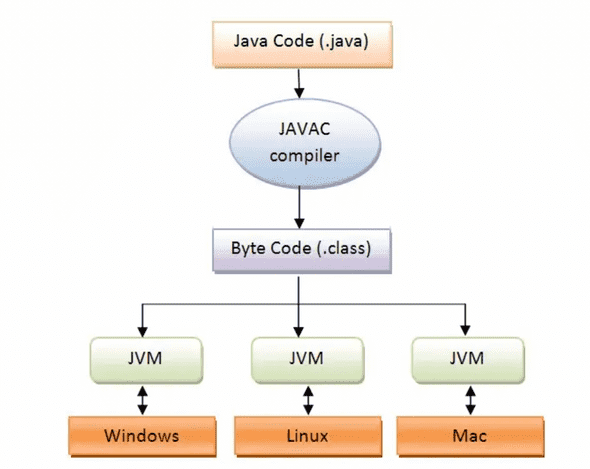

What makes Java portable across operating systems and CPU architectures is the JVM. The Java compiler converts Java source code into bytecode. The JVM then executes that bytecode, interpreting it or compiling it to machine code for whatever OS and CPU architecture it runs on. The whole process looks like this:

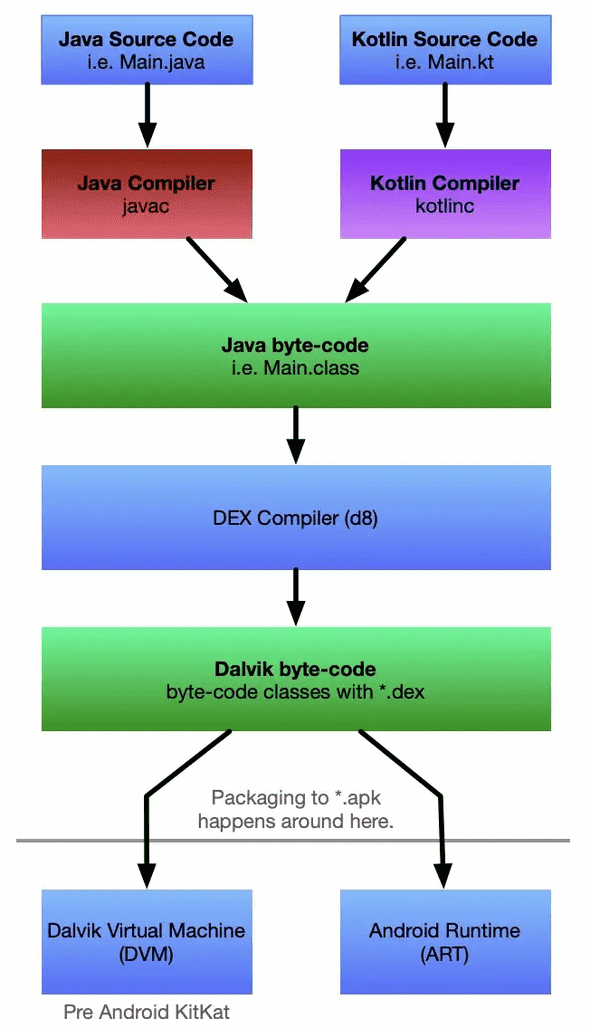
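Here is a toy stack-based interpreter in Java that makes "the bytecode is interpreted" concrete. It is only a sketch. The opcodes `PUSH`, `ADD` and `MUL` are invented for illustration and are not real JVM instructions. The structure does resemble a bytecode interpreter: a loop fetches one instruction at a time and manipulates an operand stack. The same `int[]` program runs unchanged on any machine that has this interpreter, which is the portability argument in miniature.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Toy stack-based bytecode interpreter. The opcodes are hypothetical and are
// NOT real JVM instructions; only the fetch-decode-execute shape is the point.
class ToyVm {
    static final int PUSH = 0; // push the next value in the program onto the stack
    static final int ADD = 1;  // pop two values, push their sum
    static final int MUL = 2;  // pop two values, push their product

    // Executes the program one instruction at a time and returns the top of the stack.
    static int run(int[] program) {
        Deque<Integer> stack = new ArrayDeque<>();
        int pc = 0; // program counter: index of the next instruction
        while (pc < program.length) {
            int op = program[pc++];
            switch (op) {
                case PUSH:
                    stack.push(program[pc++]);
                    break;
                case ADD:
                    stack.push(stack.pop() + stack.pop());
                    break;
                case MUL:
                    stack.push(stack.pop() * stack.pop());
                    break;
                default:
                    throw new IllegalArgumentException("unknown opcode " + op);
            }
        }
        return stack.pop();
    }

    public static void main(String[] args) {
        // (2 + 3) * 4, "compiled" by hand into toy bytecode
        int[] program = {PUSH, 2, PUSH, 3, ADD, PUSH, 4, MUL};
        System.out.println(run(program)); // prints 20
    }
}
```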

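An Android engineer might know by now that Android does not use the JVM. Because Android runs on devices with limited memory and storage, it uses Dalvik instead (and, since Lollipop, ART). The Java bytecode produced by the compiler is first converted into Dalvik Executable (DEX) bytecode, and Dalvik or ART executes this DEX code. The modified Android path therefore runs from Java source, to Java bytecode, to DEX bytecode, to machine code produced by the runtime on the device.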

AOT

“If JIT compiles the code at runtime, wouldn’t it be slow?” The answer is yes, at least until the frequently used code has been compiled. JIT trades runtime performance for storage. Naturally, a counterproposal follows. Now that storage is so cheap, can we optimize for performance over storage instead? Those who thought so turned to Ahead-of-Time (AOT) compilation. In this strategy, parts of the code are compiled to machine code before the program runs, so the JIT doesn’t have to take on that responsibility. The drawback is that machine code is specific to an operating system and CPU architecture. Supporting many targets therefore means bundling precompiled machine code for each of them in the same package, which bloats the file size.

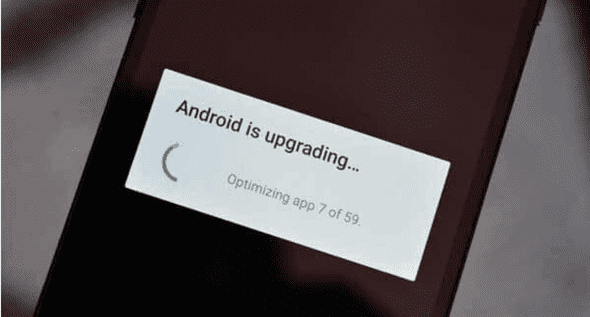

This is not a problem when the OS is rarely updated. However, Google and the OEMs regularly patch Android, so the precompilation has to be redone after every OS update. This leaves users who have just updated their phones waiting for a significant amount of time.
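The packaging side of the AOT trade-off described above is simple arithmetic, sketched below. All sizes and target names are hypothetical placeholders. The assumption that machine code is larger than the equivalent bytecode is illustrative, not a measurement. The point is only that portable bytecode ships once, while AOT output ships once per supported target.

```java
import java.util.List;

// Back-of-the-envelope model of AOT packaging. Sizes and target names are
// hypothetical placeholders, not measurements.
class AotPackageSize {
    // Portable bytecode: one artifact for every target; the VM handles the rest at runtime.
    static long bytecodePackageBytes(long bytecodeBytes) {
        return bytecodeBytes;
    }

    // AOT: precompiled machine code must be bundled once per (OS, CPU) target.
    static long aotPackageBytes(long machineCodeBytesPerTarget, List<String> targets) {
        return machineCodeBytesPerTarget * targets.size();
    }

    public static void main(String[] args) {
        List<String> targets = List.of("linux-x86_64", "linux-arm64", "windows-x86_64", "macos-arm64");
        long bytecode = 5_000_000L;             // hypothetical 5 MB of bytecode
        long machineCodePerTarget = 8_000_000L; // hypothetical 8 MB of machine code per target
        System.out.println(bytecodePackageBytes(bytecode));                  // prints 5000000
        System.out.println(aotPackageBytes(machineCodePerTarget, targets)); // prints 32000000
    }
}
```

The AOT package grows linearly with the number of targets, while the bytecode package does not depend on it at all.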

Crowdsourced Data

Now let’s go back to JIT for a second. More modern JIT implementations come with execution counters that track how often each method runs. This tells the JIT which code paths are hot and worth compiling, which improves performance. Of course, this is based only on how the individual user interacts with the application. Now suppose the runtime had access to the cloud, so that it could crowdsource the code paths exercised by users around the world. Could it, in theory, pre-optimize execution for a new user? The answer is yes. Now that the cloud is widely available, Android can rely on crowdsourced data to make apps run even faster. Google calls this Cloud Profiles.

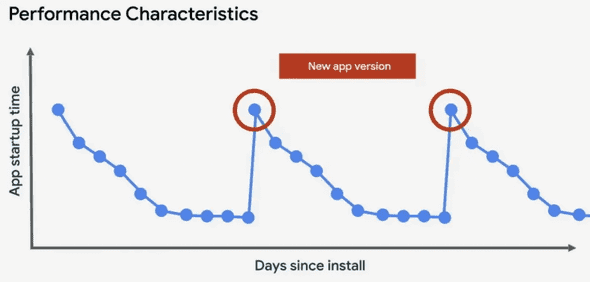
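Below is a minimal sketch of the execution-counter idea. The class `HotnessCounter`, the threshold of 3 and the method names are hypothetical. Real runtimes such as HotSpot and ART use their own, more elaborate heuristics. Each call increments a per-method counter. The call that reaches the threshold is the moment a JIT would queue the method for compilation.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Minimal sketch of a JIT-style hotness counter. The threshold and method
// names are hypothetical; real runtimes use their own heuristics.
class HotnessCounter {
    private final int threshold;
    private final Map<String, Integer> counts = new HashMap<>();
    private final Set<String> compiled = new HashSet<>();

    HotnessCounter(int threshold) {
        this.threshold = threshold;
    }

    // Called on every invocation. Returns true only for the call that pushes the
    // method over the threshold, i.e. when a JIT would queue it for compilation.
    boolean recordCall(String method) {
        if (compiled.contains(method)) {
            return false; // already compiled: no need to keep counting
        }
        int n = counts.merge(method, 1, Integer::sum);
        if (n >= threshold) {
            compiled.add(method);
            return true;
        }
        return false;
    }

    boolean isCompiled(String method) {
        return compiled.contains(method);
    }

    public static void main(String[] args) {
        HotnessCounter jit = new HotnessCounter(3);
        String[] trace = {"onCreate", "bindRow", "bindRow", "onScroll", "bindRow"};
        for (String m : trace) {
            if (jit.recordCall(m)) {
                System.out.println("compile " + m); // prints "compile bindRow" once
            }
        }
    }
}
```

The counts live on a single device, which is exactly the limitation the crowdsourcing idea tries to remove.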

Cloud Profiles, however, have one problem. They excel at identifying execution paths, but they are only as good as the lifespan of the app version. When the app is updated, the Cloud Profiles for the previous version become useless.

This is because Cloud Profiles have no data yet on how the new app version executes, so they cannot pre-optimize it for early adopters. The gap closes over time as data for the new version accumulates, but the problem remains for early adopters. For companies that deploy weekly, such as Twitter and Netflix, the benefits of Cloud Profiles are diminished.
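The version problem can be sketched as a hypothetical aggregation service. Devices upload the methods their local JIT found hot, tagged with the app's version code. The service keeps the methods reported by at least a given share of devices on that exact version. Google's actual pipeline is not described here, so `CloudProfileAggregator`, the `minShare` cutoff and the data shapes are all assumptions. The behavior that matters is that a version nobody has run yet gets an empty profile.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical model of crowdsourced profiles keyed by app version code.
// The aggregation rule (minShare cutoff) is an assumption for illustration.
class CloudProfileAggregator {
    // versionCode -> (method -> number of devices that reported it as hot)
    private final Map<Integer, Map<String, Integer>> reports = new HashMap<>();
    // versionCode -> number of devices that uploaded a report
    private final Map<Integer, Integer> devices = new HashMap<>();

    // A device running versionCode uploads the methods its local JIT found hot.
    void upload(int versionCode, Set<String> hotMethods) {
        devices.merge(versionCode, 1, Integer::sum);
        Map<String, Integer> counts = reports.computeIfAbsent(versionCode, v -> new HashMap<>());
        for (String m : hotMethods) {
            counts.merge(m, 1, Integer::sum);
        }
    }

    // Methods reported hot by at least minShare of devices on this exact version.
    // A version with no uploads yet (e.g. released today) gets an empty profile.
    Set<String> profileFor(int versionCode, double minShare) {
        Set<String> profile = new TreeSet<>();
        int n = devices.getOrDefault(versionCode, 0);
        if (n == 0) {
            return profile;
        }
        for (Map.Entry<String, Integer> e : reports.get(versionCode).entrySet()) {
            if (e.getValue() >= minShare * n) {
                profile.add(e.getKey());
            }
        }
        return profile;
    }

    public static void main(String[] args) {
        CloudProfileAggregator cloud = new CloudProfileAggregator();
        cloud.upload(41, Set.of("Home.onCreate", "Feed.bind"));
        cloud.upload(41, Set.of("Home.onCreate", "Settings.open"));
        System.out.println(cloud.profileFor(41, 0.6)); // prints [Home.onCreate]
        System.out.println(cloud.profileFor(42, 0.6)); // prints [] : no data for the new version yet
    }
}
```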

Profile-Guided Optimizations

Recently, Android introduced Profile-Guided Optimizations in the form of Baseline Profiles, which are generated with the Macrobenchmark library. They are meant to solve the problem mentioned above. In essence, the idea is to strike the right balance between JIT and AOT: only the code paths the software engineer expects users to visit often are compiled ahead of time. The profile ships with the app, so those paths can be precompiled on the device without waiting for the JIT or for Cloud Profile data to accumulate. This keeps the AAB size in check while letting the app run as smoothly as it can. But there’s one problem.
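Before getting to that problem, it helps to see what a Baseline Profile contains. Baseline Profiles are stored as human-readable rules, typically produced by a Macrobenchmark test using `BaselineProfileRule`. A method rule has flags (`H` for hot, `S` for startup, `P` for post-startup), then a class descriptor, `->` and a method signature. A class rule is just the class descriptor. The sketch below parses such lines under that assumed format. It does not expand wildcard rules, and the `com/example/app` rules in `main` are hypothetical.

```java
import java.util.Set;
import java.util.TreeSet;

// Minimal parser for Baseline Profile rule lines, assuming the format
//   [FLAGS]<class descriptor>[-><method signature>]
// where FLAGS is any of H (hot), S (startup), P (post-startup).
// Wildcard rules are not expanded in this sketch.
class ProfileRule {
    final Set<Character> flags;
    final String classDescriptor; // e.g. "Lcom/example/app/MainActivity;"
    final String method;          // null for class rules

    ProfileRule(Set<Character> flags, String classDescriptor, String method) {
        this.flags = flags;
        this.classDescriptor = classDescriptor;
        this.method = method;
    }

    static ProfileRule parse(String line) {
        String s = line.trim();
        Set<Character> flags = new TreeSet<>();
        int i = 0;
        // Leading H/S/P characters are flags; a class descriptor always starts with 'L'.
        while (i < s.length() && "HSP".indexOf(s.charAt(i)) >= 0) {
            flags.add(s.charAt(i));
            i++;
        }
        String rest = s.substring(i);
        if (!rest.startsWith("L")) {
            throw new IllegalArgumentException("expected a class descriptor: " + line);
        }
        int arrow = rest.indexOf("->");
        if (arrow < 0) {
            return new ProfileRule(flags, rest, null); // class rule
        }
        return new ProfileRule(flags, rest.substring(0, arrow), rest.substring(arrow + 2));
    }

    @Override
    public String toString() {
        return "flags=" + flags + " class=" + classDescriptor + " method=" + method;
    }

    public static void main(String[] args) {
        // Hypothetical rules for an app whose classes live under com/example/app
        System.out.println(parse("HSPLcom/example/app/MainActivity;->onCreate(Landroid/os/Bundle;)V"));
        System.out.println(parse("Lcom/example/app/FeedAdapter;"));
    }
}
```

Everything a Baseline Profile can express is decided when the profile is written. This is the root of the limitation discussed next.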

Consider an app that surfaces different home screens depending on which country you’re in. Here, Profile-Guided Optimizations lose their appeal. A single shipped profile must either cover every country’s home screen, most of which a given user will never see, or favor some countries at the expense of others. Moreover, as use cases branch out into the many features users might use in a super app, the value of Profile-Guided Optimizations quickly diminishes.
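The super-app dilemma can be put in numbers with a toy model. The country codes, the `HOME_SCREEN_PATHS` map and the method names are invented. Each country's home screen needs its own render path plus a shared initializer. Shipping the union of all countries' paths leaves much of the profile as dead weight for any one user. Shipping a profile tuned for one country leaves other users' paths uncovered.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical illustration: one profile ships to every user, but each user
// only exercises the home screen for their own country. All names are invented.
class CountryProfileTradeoff {
    static final Map<String, Set<String>> HOME_SCREEN_PATHS = Map.of(
            "SG", Set.of("SgHome.render", "Shared.init"),
            "ID", Set.of("IdHome.render", "Shared.init"),
            "VN", Set.of("VnHome.render", "Shared.init"));

    // A single profile covering every country's home screen.
    static Set<String> unionProfile() {
        Set<String> all = new TreeSet<>();
        HOME_SCREEN_PATHS.values().forEach(all::addAll);
        return all;
    }

    // Share of the shipped profile that a user in `country` actually executes.
    static double usefulShare(Set<String> shippedProfile, String country) {
        Set<String> needed = HOME_SCREEN_PATHS.get(country);
        long used = shippedProfile.stream().filter(needed::contains).count();
        return (double) used / shippedProfile.size();
    }

    // Share of the user's home-screen paths that the shipped profile covers.
    static double coverage(Set<String> shippedProfile, String country) {
        Set<String> needed = HOME_SCREEN_PATHS.get(country);
        long covered = needed.stream().filter(shippedProfile::contains).count();
        return (double) covered / needed.size();
    }

    public static void main(String[] args) {
        Set<String> union = unionProfile();
        Set<String> sgOnly = HOME_SCREEN_PATHS.get("SG");
        System.out.println(usefulShare(union, "SG")); // prints 0.5: half the profile is unused in SG
        System.out.println(coverage(sgOnly, "ID"));   // prints 0.5: an SG-tuned profile misses IdHome.render
    }
}
```

With more countries or more features per country, the union's useful share keeps shrinking, which is the diminishing value described above.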

AOT tends to be suboptimal compared with JIT because it relies on predicted code paths that may not match actual usage.

Given enough time, JIT will always outperform AOT, since it has a larger pool of data to draw on: how the app is actually used. Moreover, one does not have to predict which code paths users will take before they actually take them.
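A toy comparison can illustrate the "given enough time" argument. It counts how many calls in an observed trace would run compiled code under a static, build-time profile versus a counter-based JIT. Compile cost and code quality are ignored. The trace, the predicted profile and the threshold are hypothetical, chosen so that the user mostly exercises a feature the profile did not anticipate.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Toy model: count calls that run compiled code under (a) a static profile fixed
// at build time and (b) a JIT that compiles a method after `threshold` calls.
// Compile cost and code quality are deliberately ignored.
class StaticVsAdaptive {
    static int compiledCallsStatic(List<String> trace, Set<String> profile) {
        int hits = 0;
        for (String m : trace) {
            if (profile.contains(m)) {
                hits++; // precompiled from the very first call
            }
        }
        return hits;
    }

    static int compiledCallsJit(List<String> trace, int threshold) {
        Map<String, Integer> counts = new HashMap<>();
        int hits = 0;
        for (String m : trace) {
            int seen = counts.getOrDefault(m, 0);
            if (seen >= threshold) {
                hits++; // compiled after its first `threshold` interpreted calls
            }
            counts.put(m, seen + 1);
        }
        return hits;
    }

    public static void main(String[] args) {
        List<String> trace = new ArrayList<>();
        trace.add("Home.render");
        for (int i = 0; i < 10; i++) {
            trace.add("Wallet.pay"); // a feature the static profile did not predict
        }
        System.out.println(compiledCallsStatic(trace, Set.of("Home.render"))); // prints 1
        System.out.println(compiledCallsJit(trace, 3));                       // prints 7
    }
}
```

The static profile wins on the first calls of the paths it predicted, which is the startup benefit Baseline Profiles target. As the trace grows, the JIT's share of compiled calls approaches 100% for whatever the user actually does.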